When one builds a product, a good measure of success would not be how much time users spend on the product, but how much time users save by using it. Let search be at the core of any product for that purpose.

Three years ago, I started Crisp with Baptiste Jamin. We had very little means (and zero money!) at that time. We managed to deliver our cross-platform customer software to 100,000 happy users in a cost-efficient way.

Some of those Crisp users have received millions of customer support messages in their Crisp Inbox over the years. They also usually host large CRMs with tens of millions of contacts in them. Needless to say, those users want to be able to make sense of all this data through search. They want their search to be fast, and they want reliable results.

As of 2019, Crisp hosts north of half a billion objects (cumulated: conversations, messages, contacts, helpdesk articles, etc.). Indexing all those objects using traditional search solutions would be costly both in RAM and disk space (we've tried an SQL database in FULLTEXT mode to keep things light compared to Elasticsearch, and it was a no-go: huge disk overhead and slow search). As Crisp has a freemium business model, we need to index a lot of data for a majority of users who do not pay for the service.

As our main focus was on building a great product, we unfortunately never had much time to focus on a proper search system implementation. Until now, search in Crisp was slow and not super-reliable. Users were complaining a lot about it — rightfully so! We did not want to build our reworked search on Elasticsearch, based on our past experience with it (it eats up so much RAM for so little; which is not scalable business-wise for us).

This was enough to justify the need for a tailor-made search solution. The Sonic project was born.

What is Sonic?

Sonic can be found on GitHub as Sonic, a Fast, lightweight & schema-less search backend.

Quoting what Sonic is from the GitHub page of the project:

Sonic is a fast, lightweight and schema-less search backend. It ingests search texts and identifier tuples, that can then be queried against in microseconds time.

Sonic can be used as a simple alternative to super-heavy and full-featured search backends such as Elasticsearch in some use-cases.

Sonic is an identifier index, rather than a document index; when queried, it returns IDs that can then be used to refer to the matched documents in an external database.

Sonic is built in Rust, which ensures performance and stability. You can host it on a server of yours, and connect your apps to it over a LAN via Sonic Channel, a specialized protocol. You'll then be able to issue search queries and push new index data from your apps — whichever programming language you work with.

Sonic was designed to be fast and lightweight on resources. Expect it to run on very low resources without a glitch. At Crisp we use Sonic on a $5/mth DigitalOcean SSD VPS, which indexes half a billion objects on 300MB RAM and 15GB of disk space (yeah).

Sonic lets anyone build such a real-time search system:

Overview of Sonic

Project Philosophy

Since I first tried Redis, it's been an easy-going love-story. Redis is great, Redis is fast and Redis plays nicely on any server. That's for the world of key-value storage.

If you are hunting for a search backend which is open-source, lightweight and developer-friendly, there's nothing on the market except behemoths. The world needs the Redis of search.

With this finding in mind, the Sonic project was born.

All decisions regarding the design of Sonic must pass the test of:

- Is this feature really needed?

- How can we make it simple?

- Is Sonic still fast and lightweight with it?

- Is configuring Sonic getting harder with that new shiny thing?

On the choice of the programming language:

Sonic has to be built in a modern, well-thought programming language that is able to produce a compiled binary. Running Sonic with a GC (Garbage Collector) is also a no-go, we need real-time memory management for this kind of project. The language satisfying all those constraints is Rust.

On maximizing future outcomes for the project:

In order to maximize the value of the software for everyone, open-source contributions should be made accessible, even for non-Rust specialists. For instance, adding new stopwords for your spoken language should be easy. This asks for the source code to be well-documented (ie. profusion of comments through the code), and neatly structured (ie. if I'm looking for the part responsible for stopwords, I know where to look).

The parts of Sonic that make it easy to setup & use:

- Sonic is schema-less (same as Redis). There is no need to import schemas before you can start pushing and querying in the index. Just push unstructured text data to a collection & bucket, then query the index later with text data. If there is a need to push a vector of text entries (that could be constrained by a schema), simply concatenate non-null entries and push the result text to the index.

- Spinning up a Sonic instance is as easy as: copying the default configuration file, passing a data storage directory to Sonic and then booting it up. Takes no more than 10 seconds of your time.
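To illustrate the "concatenate non-null entries" advice from the schema-less point above, here is a minimal sketch. The `toIndexableText` helper and the `contact` record are hypothetical names made up for this example; they are not part of Sonic or of any Sonic library.

```javascript
// Hypothetical helper: flattens a structured record into a single text blob
// that could then be pushed to a schema-less index.
function toIndexableText(record, fields) {
  return fields
    .map(function(field) {
      return record[field];
    })
    // Skip missing values so that they do not end up as "null" in the text
    .filter(function(value) {
      return value !== null && value !== undefined && value !== "";
    })
    .join(" ");
}

// Made-up CRM contact, with one empty field
var contact = {
  name    : "Jane Doe",
  email   : "jane@example.com",
  company : null,
  city    : "Nantes"
};

var text = toIndexableText(contact, ["name", "email", "company", "city"]);

console.log(text); // Jane Doe jane@example.com Nantes
```

The resulting string is what you would pass as the text argument when pushing the contact to a collection & bucket.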

Features & Benefits

Sonic implements the following features:

- Search terms are stored in collections, organized in buckets; you may use a single bucket, or a bucket per user on your platform if you need to search in separate indexes.

- Search results return object identifiers, that can be resolved from an external database if you need to enrich the search results. This makes Sonic a simple word index, that points to identifier results. Sonic doesn't store any direct textual data in its index, but it still holds a word graph for auto-completion and typo corrections.

- Search query typos are corrected: if there are not enough exact-match results for a given word in a search query, Sonic tries to correct the word and searches against alternate words. You're allowed to make mistakes when searching.

- Insert and remove items in the index; index-altering operations are light and can be committed to the server while it is running. A background tasker handles the job of consolidating the index so that the entries you have pushed or popped are quickly made available for search.

- Auto-complete any word in real-time via the suggest operation. This helps build a snappy word suggestion feature in your end-user search interface.

- Full Unicode compatibility on 80+ most spoken languages in the world. Sonic removes useless stop words from any text (eg. 'the' in English), after guessing the text language. This ensures any searched or ingested text is clean before it hits the index; see languages.

- Networked channel interface (Sonic Channel), that lets you search your index, manage data ingestion (push in the index, pop from the index, flush a collection, flush a bucket, etc.) and perform administrative actions. The Sonic Channel protocol was designed to be lightweight on resources and simple to integrate with (the protocol is specified in the sections below); read protocol specification.

- Easy-to-use libraries, that let you connect to Sonic Channel from your apps; see libraries.

Oh, and as extra benefits of the technical design choices you get:

- A GDPR-ready search system: when text is pushed to the index, Sonic splits sentences into words and then hashes each word, before they get stored and linked to a result object. Hashes cannot be traced back to their source word: you can only know which hashes form a sentence together, but you cannot reconstitute the sentence with readable words. Sonic still stores non-hashed legible words in a graph for result suggestions and typo corrections, but those words are not linked together to form sentences. It means that the original text that was pushed cannot be guessed by someone hacking into your server and dumping Sonic's database. Sonic helps in designing "privacy first" apps.

- Reduced data dissemination: Sonic does not store nor return matched documents, it returns identifiers that refer to primary keys in another database (eg. MySQL, MongoDB, etc.). Once you get the ID results from Sonic for a search query, you need to fetch the pointed-to documents in your main database (eg. you fetch the user full name and email address from MySQL if you built a CRM search engine). Data stores synchronization is known to be hard, so you don't have to do it at all with Sonic.
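The word hashing idea from the GDPR point above can be sketched in a few lines. This is an illustration only: I use SHA-256 from Node's `crypto` module truncated to 32 bits as a stand-in, which is an assumption made for this example and not a statement about the hash function Sonic actually uses. The `hashWord` and `hashSentence` names are hypothetical.

```javascript
var crypto = require("crypto");

// Illustration only: SHA-256 truncated to 32 bits stands in for the real
// hash function (assumption, not Sonic's actual implementation).
function hashWord(word) {
  return crypto
    .createHash("sha256")
    .update(word, "utf8")
    .digest()
    .readUInt32BE(0);
}

// Splits a sentence into words, then replaces each word with its hash
function hashSentence(sentence) {
  return sentence
    .toLowerCase()
    .split(/\s+/)
    .filter(Boolean)
    .map(hashWord);
}

var hashes = hashSentence("the quick brown fox");

// Only numbers are kept; the same word always yields the same number,
// which is what makes lookups at search time possible.
console.log(hashes);
console.log(hashWord("fox") === hashes[3]); // true
```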

Limitations & Trade-offs

In any specialized & optimized technology, there are always trade-offs to consider:

- Indexed data limits: Sonic is designed for large search indexes split over thousands of search buckets per collection. An IID (ie. Internal-ID) is stored in the index as a 32-bit number, which theoretically allows up to ~4.3 billion objects (ie. OIDs) to be indexed per bucket. We've observed storage savings of 30% to 40%, which justifies the trade-off on large databases (versus Sonic using 64-bit IIDs). Also, Sonic only keeps the N most recently pushed results for a given word, in a sliding-window fashion (the sliding window width can be configured).

- Search query limits: Sonic Natural Language Processing system (NLP) does not work at the sentence-level, for storage compactness reasons (we keep the FST graph shallow as to reduce time and space complexity). It works at the word-level, and is thus able to search per-word and can predict a word based on user input, though it is unable to predict the next word in a sentence.

- Real-time limits: the FST needs to be rebuilt every time a word is pushed or popped from the bucket graph. As this is quite heavy, Sonic batches rebuild cycles and thus suggested word results may not be 100% up-to-date.

- Interoperability limits: Sonic Channel protocol is the only way to read and write search entries to the Sonic search index. Sonic does not expose any HTTP API. Sonic Channel has been built with performance and minimal network footprint in mind.

- Hardware limits: Sonic performs the search on the file-system directly; ie. it does not fit the index in RAM. A search query results in a lot of random accesses on the disk, which means that it will be quite slow on old-school HDDs and super-fast on newer SSDs. Do store the Sonic database on SSD-backed file systems only.
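Two of the limits listed above are easy to picture with a bit of code: the 32-bit identifier ceiling, and the per-word sliding window. The sketch below is a simplified model under my own assumptions; `pushToWindow` is a hypothetical name, and the way Sonic actually stores and evicts entries may differ.

```javascript
// Number of distinct values a 32-bit identifier can take
var maxIIDs = Math.pow(2, 32);

console.log(maxIIDs); // 4294967296

// Hypothetical sliding window: keeps only the `width` most recently pushed
// IIDs for a word, most recent first.
function pushToWindow(entries, iid, width) {
  // Move an already-known IID to the front rather than duplicating it
  var next = entries.filter(function(existing) {
    return existing !== iid;
  });

  next.unshift(iid);

  // Anything beyond the window width falls out of the index
  return next.slice(0, width);
}

var iids = [];

[1, 2, 3, 4].forEach(function(iid) {
  iids = pushToWindow(iids, iid, 3);
});

console.log(iids); // [ 4, 3, 2 ]
```

With a window width of 3, the oldest object (1) is no longer returned for that word once a fourth object is pushed.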

Test Sonic in 5 Minutes

Configuring a Sonic instance does not take much time. I encourage you to follow this quick-start guide to get an idea of how Sonic can work for you. You only need Docker, NodeJS and a bit of JavaScript knowledge (no need to be a developer!).

1. Test Requirements

Check that your test environment has the following runtimes installed:

- Docker (latest is better)

- NodeJS (version 6.0.0 and above)

I also assume you are running MacOS. All paths for this test will be MacOS paths. If you are running Linux, you may use your /home/ instead as test path. For a permanent deployment, you would use proper UNIX /etc/ configuration and /var/lib/ data paths.

If you are not willing to use Docker to run Sonic, you can try installing it from Rust's Cargo, or compile it yourself. This guide does not detail how to do that, so please refer to the README if you intend to do things your own way.

2. Run Sonic

Notice: the Sonic version in use here is v1.2.0. You may change this if there is a more recent version of Sonic when you read this.

2.1. Start your Docker daemon, then execute:

docker pull valeriansaliou/sonic:v1.2.0

This will pull the Sonic Docker image to your environment.

2.2. Initialize a Sonic folder for our tests:

mkdir ~/Desktop/sonic-test/ && cd ~/Desktop/sonic-test/

2.3. Pull the default configuration file:

wget https://raw.githubusercontent.com/valeriansaliou/sonic/master/config.cfg

This will download Sonic default configuration.

2.4. Edit your configuration file:

- Open the downloaded configuration file with a text editor;

- Change log_level = "error" to log_level = "debug";

- Change inet = "[::1]:1491" to inet = "0.0.0.0:1491";

- Update all paths matching ./data/store/* to /var/lib/sonic/store/*;

2.5. Create Sonic store directories:

mkdir -p ./store/fst/ ./store/kv/

These two directories will be used to store Sonic databases (KV is for the actual Key-Value index and FST stands for the graph of words).

2.6. Run the Sonic server:

docker run -p 1491:1491 -v ~/Desktop/sonic-test/config.cfg:/etc/sonic.cfg -v ~/Desktop/sonic-test/store/:/var/lib/sonic/store/ valeriansaliou/sonic:v1.2.0

This will start Sonic on port 1491 and bind localhost:1491 to the Docker machine.

2.7. Test the connection to Sonic:

telnet localhost 1491

Does it open a connection successfully? Do you see Sonic's greeting? (ie. CONNECTED <sonic-server v1.2.0>). If so, you can continue.

3. Insert Text in Sonic

3.1. Start by creating a folder for your JS code:

mkdir ./scripts/ && cd ./scripts/

3.2. Add a package.json file with contents:

{
  "dependencies": {
    "sonic-channel": "^1.1.0"
  }
}

3.3. Create an insert.js script with contents:

var SonicChannelIngest = require("sonic-channel").Ingest;

var data = {
  collection : "collection:1",
  bucket : "bucket:1",
  object : "object:1",
  text : "The quick brown fox jumps over the lazy dog."
};

var sonicChannelIngest = new SonicChannelIngest({
  host : "localhost",
  port : 1491,
  auth : "SecretPassword"
}).connect({
  connected : function() {
    sonicChannelIngest.push(
      data.collection, data.bucket, data.object, data.text,

      function(_, error) {
        if (error) {
          console.error("Insert failed: " + data.text, error);
        } else {
          console.info("Insert done: " + data.text);
        }

        process.exit(0);
      }
    );
  }
});

process.stdin.resume();

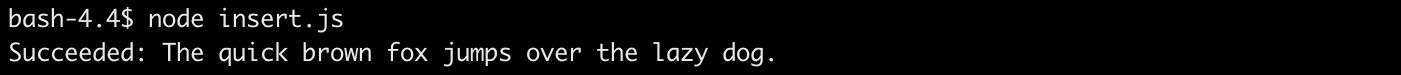

3.4. Install and run your insert script:

npm install && node insert.js

You should see the following if your insert succeeded:

4. Query Your Index

4.1. Create a search.js script with contents:

var SonicChannelSearch = require("sonic-channel").Search;

var data = {
  collection : "collection:1",
  bucket : "bucket:1",
  query : "brown fox"
};

var sonicChannelSearch = new SonicChannelSearch({
  host : "localhost",
  port : 1491,
  auth : "SecretPassword"
}).connect({
  connected : function() {
    sonicChannelSearch.query(
      data.collection, data.bucket, data.query,

      function(results, error) {
        if (error) {
          console.error("Search failed: " + data.query, error);
        } else {
          console.info("Search done: " + data.query, results);
        }

        process.exit(0);
      }
    );
  }
});

process.stdin.resume();

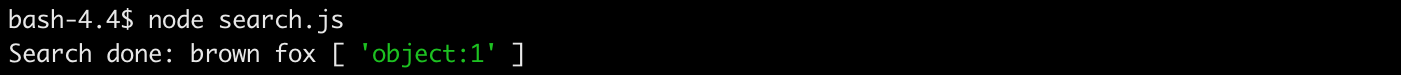

4.2. Run your search script:

node search.js

You should see the following if your search succeeded:

5. Go Further

Now that you know how to push and query objects in the search index, I invite you to learn more about what you can do with node-sonic-channel. You may find an alternate library for your programming language on the Sonic integrations registry.

Advanced users might also be interested in the Sonic Channel Protocol Specification document. You could easily implement the raw Sonic Channel protocol in your apps via a raw TCP client socket, if there is no library yet for your programming language.
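If you go the raw TCP route, most of the work is formatting one-line text commands. The sketch below builds such lines; the command shapes (`START`, `PUSH`, `QUERY` with `LIMIT`) reflect my reading of the Sonic Channel Protocol Specification and should be treated as assumptions to verify against that document. The helper names are hypothetical, and the way I sanitize text (dropping backslashes, flattening newlines, escaping double quotes) is a conservative choice of mine rather than something the specification mandates.

```javascript
// Wraps free text in double quotes so that it fits on a single command line.
// Assumption: double quotes are escaped with a backslash; backslashes and
// newlines are replaced with spaces to stay on the safe side.
function quoteText(text) {
  return '"' + text
    .replace(/\\/g, " ")
    .replace(/\r?\n/g, " ")
    .replace(/"/g, '\\"') + '"';
}

// Opens a channel in a given mode (eg. "search" or "ingest")
function startCommand(mode, password) {
  return "START " + mode + " " + password;
}

// Pushes text for an object, in ingest mode
function pushCommand(collection, bucket, object, text) {
  return "PUSH " + collection + " " + bucket + " " + object + " " + quoteText(text);
}

// Queries the index, in search mode
function queryCommand(collection, bucket, terms, limit) {
  return "QUERY " + collection + " " + bucket + " " + quoteText(terms) + " LIMIT(" + limit + ")";
}

console.log(startCommand("ingest", "SecretPassword"));
console.log(pushCommand("collection:1", "bucket:1", "object:1", "The quick brown fox"));
console.log(queryCommand("collection:1", "bucket:1", "brown fox", 10));
```

Each line would then be written to a socket opened with Node's `net.connect()`, followed by a newline character, and the server's replies read back line by line.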

Implementation Concepts

The following implementation concepts are quick notes that can help anyone understand how Sonic works, if he or she intends to modify Sonic's source code, or build their own search index backend from scratch. I purposefully did not get into the "gory" details here.

1. On The Index

A search engine is nothing more than a huge index of words; which we call an inverted index. Words map to objects, which are indeed search results.

Sentences that are ingested by the indexing system are split into words. Each word is then stored in a key-value store where keys are words, and values are indexed objects. The indexed object the word points to is added to the set of other objects that this word references (a given word may point to N objects, where N is greater than or equal to 1).

Once someone comes with a search query, the query is split into words. Then, each word gets looked up in the index separately. Object references are returned for each word. Finally, all references are aggregated together so that the final result is the set intersection of all words' objects.

For instance, if a user queries the index with text "fox dog", it will be understood as "send me all objects that got indexed with sentences that contain both 'fox' AND 'dog'"; so the index will look up 'fox' and find objects [1, 2, 3], then look up 'dog' and find objects [2, 3]. Therefore, the result for the query is [2, 3], which is the intersection of the sets of objects we got for each lookup.
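The 'fox' AND 'dog' example can be reproduced with a toy in-memory inverted index. This is a minimal sketch of the general technique, not Sonic's code: it keeps everything in a `Map`, splits words with an ASCII-only regular expression, and the `ingest` and `search` names are made up for the example.

```javascript
// Inverted index: word -> Set of object identifiers
var index = new Map();

// Naive word splitting (ASCII letters and digits only)
function splitWords(text) {
  return text.toLowerCase().split(/\W+/).filter(Boolean);
}

function ingest(objectId, sentence) {
  splitWords(sentence).forEach(function(word) {
    if (!index.has(word)) {
      index.set(word, new Set());
    }

    index.get(word).add(objectId);
  });
}

function search(query) {
  var words = splitWords(query);

  if (words.length === 0) {
    return [];
  }

  // Look up each word separately, then intersect the sets of objects
  var result = words
    .map(function(word) {
      return index.get(word) || new Set();
    })
    .reduce(function(shared, objects) {
      return new Set(Array.from(shared).filter(function(id) {
        return objects.has(id);
      }));
    });

  return Array.from(result);
}

ingest(1, "The fox runs");
ingest(2, "The fox chases the dog");
ingest(3, "A dog barks at a fox");

console.log(search("fox dog")); // [ 2, 3 ]
```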

2. On Cleaning User Input

Of course, user input is often full of typing mistakes (typos) and words that don't really matter (we call them stopwords; ie. words like the, he, like for English). Thus, user input needs to be cleaned up. This is where the lexer comes into play (see definition). The lexer (or tokenizer) works by taking in a sequence of words (ie. sentences), and then outputting clean words (tokens). The lexer is capable of knowing where to split the sentence to get individual words, removing gibberish parts, dropping stopwords and normalizing words (eg. remove accents and lower-case all characters).
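A stripped-down lexer along those lines could look like the sketch below. It is a simplification under stated assumptions: the stopword list is a tiny made-up English sample (not Sonic's list), and the splitting rule only suits Latin-script text once accents are removed.

```javascript
// Tiny illustrative English stopword list (not Sonic's actual list)
var STOPWORDS = new Set(["the", "a", "an", "he", "she", "is", "like", "over"]);

// Removes accents and lower-cases all characters
function normalize(text) {
  // NFD splits accented letters into a base letter plus a combining mark,
  // and the combining marks are then dropped
  return text.normalize("NFD").replace(/[\u0300-\u036f]/g, "").toLowerCase();
}

// Splits normalized text into tokens and drops stopwords
function tokenize(text) {
  return normalize(text)
    .split(/[^a-z0-9]+/)
    .filter(function(token) {
      return token.length > 0 && !STOPWORDS.has(token);
    });
}

console.log(tokenize("The quick brown fox jumps over the lazy dog."));
// [ 'quick', 'brown', 'fox', 'jumps', 'lazy', 'dog' ]

console.log(tokenize("Café OUVERT"));
// [ 'cafe', 'ouvert' ]
```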

In order to perform proper text lexing, the system first needs to understand which language a text is written in. Is it English? French? Chinese? This is especially important for the stopword removal part, as every language uses a different set of stopwords. To guess that, we use a technique called n-grams, and more specifically trigrams. The longer the input text is, the more reliable locale detection via trigrams gets. For scripts like Latin that are used by a wide range of languages, this is necessary. For the Mandarin script, we don't need this as it is used by a single language group: Chinese.
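To give an idea of what trigrams are, the sketch below extracts and counts them for a piece of text. This only covers the extraction step; a real detector would then compare those counts against per-language trigram profiles, which I leave out here. The `trigrams` function name and the space-padding convention are choices made for this example.

```javascript
// Counts every sequence of 3 consecutive characters in a text.
// The text is padded with spaces so that word boundaries count too.
function trigrams(text) {
  var padded = " " + text.toLowerCase().replace(/\s+/g, " ").trim() + " ";
  var counts = new Map();

  for (var i = 0; i + 3 <= padded.length; i++) {
    var gram = padded.slice(i, i + 3);

    counts.set(gram, (counts.get(gram) || 0) + 1);
  }

  return counts;
}

var counts = trigrams("banana");

console.log(Array.from(counts.keys()));
// [ ' ba', 'ban', 'ana', 'nan', 'na ' ]

console.log(counts.get("ana")); // 2
```

A short input yields only a handful of trigrams, which is why detection gets more reliable as the text gets longer.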

3. On Correcting Input Mistakes

As humans all make mistakes, correcting typos is a nice thing to have. To correct typos, we need to search all words for likely alternate words for a given word (eg. user enters 'animol' while they meant 'animal'). A perfect data structure to do this is an ordered graph. We use what's called an FST (a Finite-State Transducer). Using that, we are capable of correcting typos using the Levenshtein distance (ie. find the alternate word with the lowest distance) and prefix matching (ie. find words that share a common prefix; eg. 'ani' would map to 'animal').
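Both operations can be demonstrated without an FST. The sketch below brute-forces a plain array of words, which is fine for a demo but is precisely what an FST lets you avoid on a large vocabulary; it shows the two matching rules, not Sonic's implementation. The `closestWord` and `completions` names are hypothetical.

```javascript
// Classic dynamic-programming Levenshtein distance (row by row)
function levenshtein(a, b) {
  var previous = [];

  for (var j = 0; j <= b.length; j++) {
    previous[j] = j;
  }

  for (var i = 1; i <= a.length; i++) {
    var current = [i];

    for (var k = 1; k <= b.length; k++) {
      var cost = a[i - 1] === b[k - 1] ? 0 : 1;

      current[k] = Math.min(
        previous[k] + 1,        // deletion
        current[k - 1] + 1,     // insertion
        previous[k - 1] + cost  // substitution
      );
    }

    previous = current;
  }

  return previous[b.length];
}

// Typo correction: the known word with the lowest distance to the input
function closestWord(input, words) {
  return words.reduce(function(best, word) {
    return levenshtein(input, word) < levenshtein(input, best) ? word : best;
  });
}

// Prefix matching: all known words that start with the input
function completions(prefix, words) {
  return words.filter(function(word) {
    return word.startsWith(prefix);
  });
}

var words = ["animal", "animate", "anchor", "dog"];

console.log(closestWord("animol", words)); // animal
console.log(completions("ani", words));    // [ 'animal', 'animate' ]
```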

With all those base concepts, one can build a proper search index. The concepts described here are exactly what Sonic builds on.

Closing Notes

In building Sonic, we hope that our Crisp users will save time and find the data they are looking for. Sonic has been deployed on all Crisp products and is now used as the sole backend for all our search features. This ranges from Crisp Inbox to Crisp Helpdesk. Yet, there are still so many existing features we could improve with search!

In releasing it to the wider public as open-source software, we want to provide the community with a missing piece in the "build your own SaaS business" ecosystem: the Redis of search. It addresses an age-old itch; I can't wait to see what people will build with Sonic!

I will start adding new useful features to Sonic very soon. Those features are already on the roadmap. You can check the development milestones on GitHub to see what's next.

As of March 2019, Sonic is used in production at Crisp to index half a billion objects across all Crisp's products. After facing weird & pesky production bugs and initial design issues, which we quickly fixed, Sonic is now stable and able to handle all of Crisp's search + ingestion load on a single $5/mth cloud server, without a glitch. Our sysadmins love it.

🇫🇷 Written from Nantes, France.