I’ve recently migrated both the Prose server and my personal server over to Nomad. Services such as websites now run in the Nomad cluster as independent Docker containers, behind an NGINX load balancer that also runs in the cluster.

Once the migration to Nomad was completed, I was looking for an easy way to trigger automatic updates of my running services, since the recommended way to update a Nomad job is to modify its HCL job file and update the Docker image tag.

The image update process is quite tedious in practice. You first release the Docker image to your container registry (probably automated using a CI/CD pipeline), then ask your Nomad cluster to pull the image you just released. That second step is done by hand, by editing your Nomad job file and submitting the job update to your Nomad cluster. Wouldn’t there be a better way?

After searching Nomad’s documentation and communities on the matter, and being familiar with Kubernetes, I was looking for a Nomad CLI command similar to Kube’s:

kubectl set image deployment/my-deployment mycontainer=myimage

Alas, I realized there was no way to request an image update with Nomad other than manually editing the job HCL file. We’ll therefore hack around this manual job edit process to automate image update rollouts from Nomad’s CLI.

1. Nomad variables to the rescue

Nomad provides a way to store variables, and use them in various places in job files. Variables can be read and modified. If modified, any job using the modified variable can be re-evaluated by the control plane and trigger updates of running containers.

Let’s say we have the following Nomad job file for prose-web, which runs Prose’s website (I reduced it to its essential parts for this example):

job "prose-web" {
  type = "service"

  group "prose-web" {
    count = 1

    task "prose-web" {
      driver = "docker"

      config {
        image = "ghcr.io/prose-im/prose-web:v1.0.0"
      }
    }
  }
}

Here, we want to replace v1.0.0 in the Docker image tag with a variable. Let’s call this variable IMAGE_TAG.

First, we’re modifying the Docker config so that it becomes:

config {
  image = "ghcr.io/prose-im/prose-web:${IMAGE_TAG}"
}

Note that IMAGE_TAG is an environment variable in this context; it does not refer to a Nomad variable. We will need to define this environment variable somewhere, and tell Nomad to assign the environment variable value from an actual Nomad variable.

To achieve that, let’s add a template block and tell Nomad to load the rendered file into the task’s environment variables with env = true. This assigns the value of Nomad’s variable IMAGE_TAG at path nomad/jobs/prose-web to an environment variable of the same name:

# Those variables allow updating the deployed image from CI/CD
template {
  data = <<EOH
IMAGE_TAG="{{ with nomadVar "nomad/jobs/prose-web" }}{{ .IMAGE_TAG }}{{ end }}"
EOH

  destination = "local/run.env"
  env = true
}
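To make the mechanism more concrete, here is a rough shell simulation of what happens once the template has been rendered. It is only an illustration: Nomad does all of this internally, the temporary file stands in for local/run.env, and the value latest is an assumption about what the Nomad variable currently holds.

```shell
# Simulation only: on a real client, Nomad renders the template and loads it.
env_file="$(mktemp)"

# What the rendered template would contain if the Nomad variable held "latest"
printf 'IMAGE_TAG="%s"\n' "latest" > "$env_file"

# env = true: each KEY=value line becomes an environment variable of the task
set -a          # export every variable assigned while the file is sourced
. "$env_file"
set +a

# ${IMAGE_TAG} in the Docker config is then interpolated from that environment
image="ghcr.io/prose-im/prose-web:${IMAGE_TAG}"
echo "$image"

rm -f "$env_file"
```

Changing the Nomad variable changes the rendered file, which is what lets a single variable update drive the image tag.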

Perfect! To summarize, our job file now looks like:

job "prose-web" {
  type = "service"

  group "prose-web" {
    count = 1

    task "prose-web" {
      driver = "docker"

      # Those variables allow updating the deployed image from CI/CD
      template {
        data = <<EOH
IMAGE_TAG="{{ with nomadVar "nomad/jobs/prose-web" }}{{ .IMAGE_TAG }}{{ end }}"
EOH

        destination = "local/run.env"
        env = true
      }

      config {
        image = "ghcr.io/prose-im/prose-web:${IMAGE_TAG}"
      }
    }
  }
}

Now, create a Nomad variable named IMAGE_TAG, at path nomad/jobs/prose-web, with a value of latest (this will be updated later on to a version value when deploying, e.g. v1.0.0).
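If you prefer the CLI over the Web UI, the variable can be created with nomad var put. The sketch below assumes your shell already has NOMAD_ADDR and NOMAD_TOKEN set for a token allowed to write to this path. The run helper and its DRY_RUN switch are hypothetical conveniences of this example, not Nomad features: by default the commands are only printed.

```shell
VAR_PATH="nomad/jobs/prose-web"   # must match the path read by the template
INITIAL_TAG="latest"              # placeholder until CI sets a real version

# Hypothetical helper for this sketch: it only prints the command unless
# DRY_RUN=0 is set, so nothing touches your cluster by accident.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    echo "would run: $*"
  fi
}

# Create the variable with a single IMAGE_TAG item
run nomad var put "$VAR_PATH" "IMAGE_TAG=$INITIAL_TAG"

# Read it back to confirm what the job template will see
run nomad var get "$VAR_PATH"
```

Run it once with DRY_RUN=0 exported when you are happy with the printed commands.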

Finally, submit your updated job definition to Nomad with:

nomad job run your-job.hcl

You should now observe your job update being rolled out, with the Docker image at tag latest being pulled on your Nomad client nodes.

If that worked, we can now proceed to the next step: automatically updating our newly-created IMAGE_TAG variable from our CI/CD pipeline (here: GitHub Actions). But first we have some preparation work to do.

2. Securely exposing Nomad API publicly

Before we proceed with GitHub Actions, we need to expose our Nomad API in a secure way, so that the Nomad CLI can administer our Nomad control plane from the public Internet. This is required so that GitHub Actions runners can reach our Nomad API.

Since I’m also running an NGINX load balancer on my Nomad cluster, I’ve simply added an admin.prose.org virtual host that proxies to my Nomad API:

# --- [admin.prose.org] ---

upstream host-nomad {
  ip_hash;

  # We are proxying to the host Nomad here (running on the same server, \
  # since Nomad runs on the host system). The target port is local and is \
  # not accessible by public users, for security reasons. This upstream is \
  # therefore marked as 'host'.
  server [::1]:4646 max_fails=0;
}

server {
  listen [::]:443 ssl;
  server_name admin.prose.org;

  root /dev/null;

  location / {
    # Authenticate users, adding an extra layer of security on the top of \
    # Nomad API, which may expose some public routes with no ACL.
    auth_basic "Authenticate with your Proxy Auth keypair";
    auth_basic_user_file htpasswd/admin;

    proxy_pass http://host-nomad;

    proxy_connect_timeout 5s;
    proxy_read_timeout 600s;
    proxy_send_timeout 10s;

    proxy_http_version 1.1;

    proxy_set_header Connection "upgrade";
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $remote_addr;
    proxy_set_header Early-Data $ssl_early_data;

    # Do not buffer responses (for Nomad log streaming)
    proxy_buffering off;
  }
}

Note that I’m running my load balancer job in Docker host network mode, so that it has access to my local loopback and can connect to Nomad’s API, running on the same server. If you’re running a multi-node Nomad cluster, with Nomad clients set apart from Nomad servers, you would have to connect over the LAN instead (modify the upstream block accordingly).

In my case, I needed to change the network_mode in my task/config block:

# Required to be able to see real servers/clients IPs
network_mode = "host"

Also, make sure to create a file named htpasswd/admin containing your HTTP Basic authentication username and password. I’d suggest reading NGINX’s guide on how to set up HTTP Basic authentication.
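As a minimal sketch, the file can be generated with openssl, which produces the apr1 hash format that NGINX accepts. The username deployer, the password change-me and the HTPASSWD_DIR location are placeholders of mine; write the file wherever your NGINX configuration resolves htpasswd/admin.

```shell
# Placeholder credentials for illustration; pick your own
PROXY_USER="deployer"
PROXY_PASSWORD="change-me"

# Assumed location; adjust to where NGINX looks up htpasswd/admin
HTPASSWD_DIR="${HTPASSWD_DIR:-./htpasswd}"
mkdir -p "$HTPASSWD_DIR"

# One "user:hash" line per account, hashed with apr1 (Apache MD5)
printf '%s:%s\n' "$PROXY_USER" "$(openssl passwd -apr1 "$PROXY_PASSWORD")" \
  > "$HTPASSWD_DIR/admin"

# Keep the file away from other local users
chmod 640 "$HTPASSWD_DIR/admin"
```

The same username and password, joined as username:password, are what the Nomad CLI expects in NOMAD_HTTP_AUTH later on.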

3. Create Nomad deployment user token and policy

We’re certainly not going to use our Nomad super-admin bootstrap token with GitHub Actions. We will need to set up a restricted Nomad policy and token.

Start by creating a new policy, which we’ll call deployer, in a file named deployer.policy.hcl:

namespace "default" {
  policy = "deny"

  variables {
    path "nomad/jobs/prose-web" {
      capabilities = ["write"]
    }
  }
}

This policy gives restricted write access to certain variable paths which you use for jobs that you want to deploy automatically. In our case, the nomad/jobs/prose-web path.

Then, import that policy to Nomad:

nomad acl policy apply deployer deployer.policy.hcl

Now, create a new token for your deployer policy:

nomad acl token create -name "GitHub Actions" -policy deployer

Take note of the token secret that was issued; we will set it up later on as a GitHub secret.

4. Updating a Nomad variable from GitHub Actions

Great! Now that our job file uses a Nomad variable to specify the Docker image tag, and that our Nomad API is publicly accessible, we can automate the image tag update from our GitHub Actions pipeline. That’s the easy step.

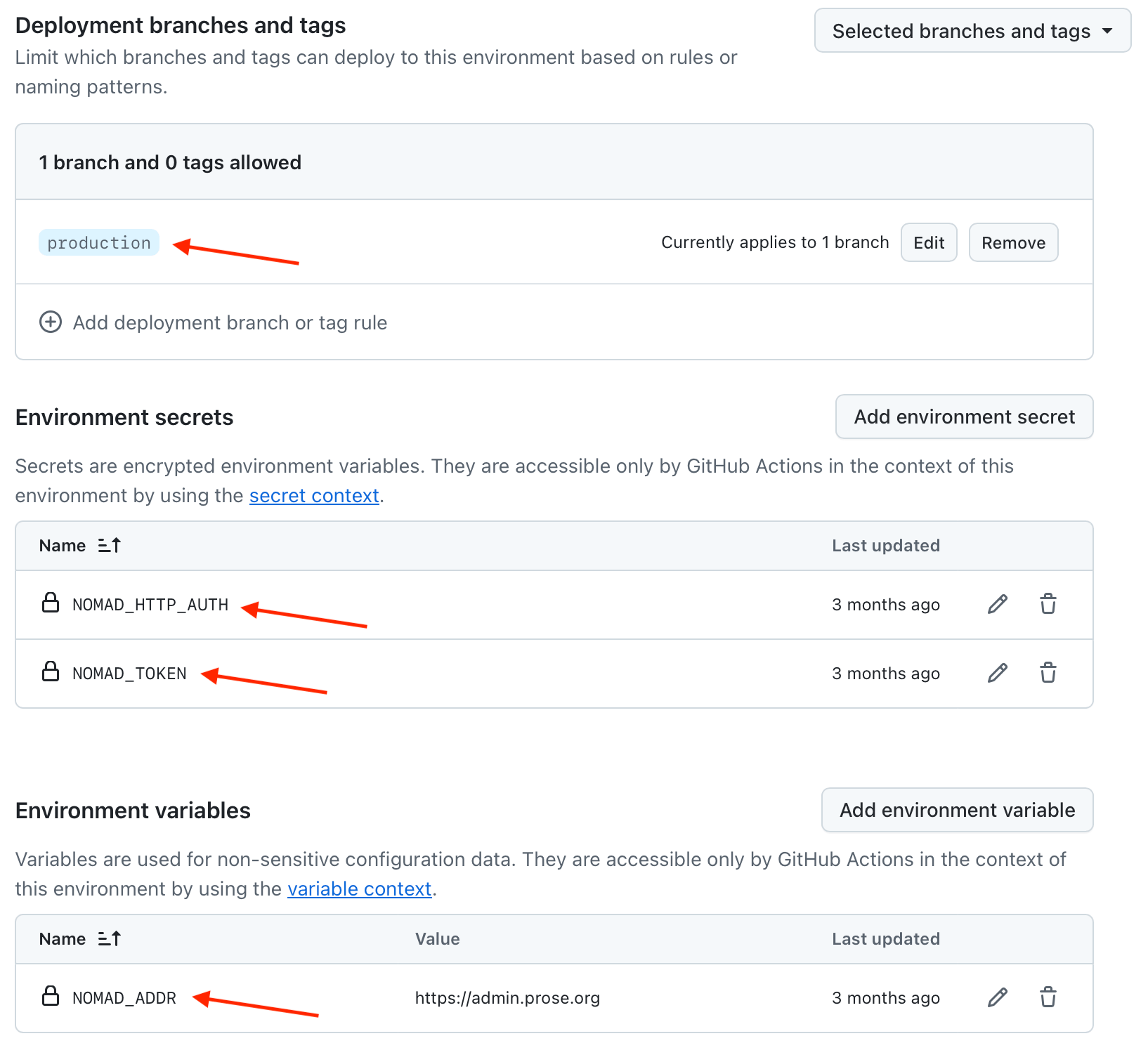

Configuring GitHub secret variables

The first thing to do is set up secrets containing our Nomad API token and our HTTP Basic authentication username/password. We also need to create a regular variable containing our Nomad API URL.

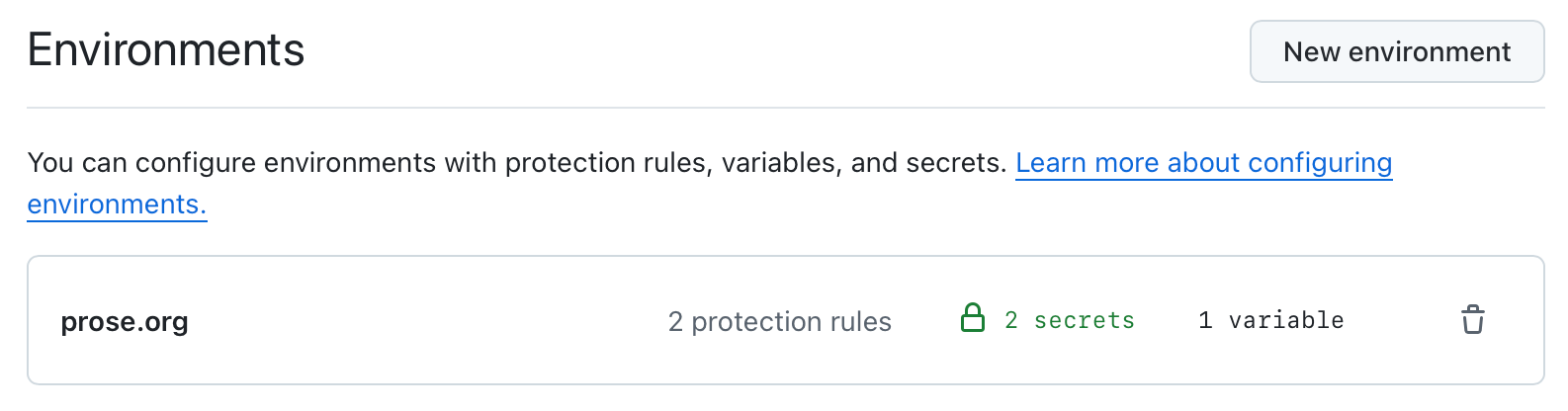

For improved security, I’ve chosen to set up a GitHub Environment, so that only GitHub Actions jobs triggered on the production Git branch have access to my secret variables.

Supposing you’re also using the production branch for your deployments, an environment can be easily created in your GitHub project settings:

Setting up a deployment GitHub Actions step

The final step is to update our existing GitHub Actions workflow and add a new deploy step, following an existing build step that would publish our Docker image to GitHub’s container registry:

on:

push:

branches:

- production

name: Build and Deploy

jobs:

build:

# (previous job step here, to release Docker image)

deploy:

needs: [build]

environment: prose.org

runs-on: ubuntu-latest

steps:

- name: Install Nomad

uses: hashicorp/setup-nomad@v1.0.0

- name: Request deployment to Nomad

env:

NOMAD_ADDR: ${{ vars.NOMAD_ADDR }}

NOMAD_TOKEN: ${{ secrets.NOMAD_TOKEN }}

NOMAD_HTTP_AUTH: ${{ secrets.NOMAD_HTTP_AUTH }}

run: |

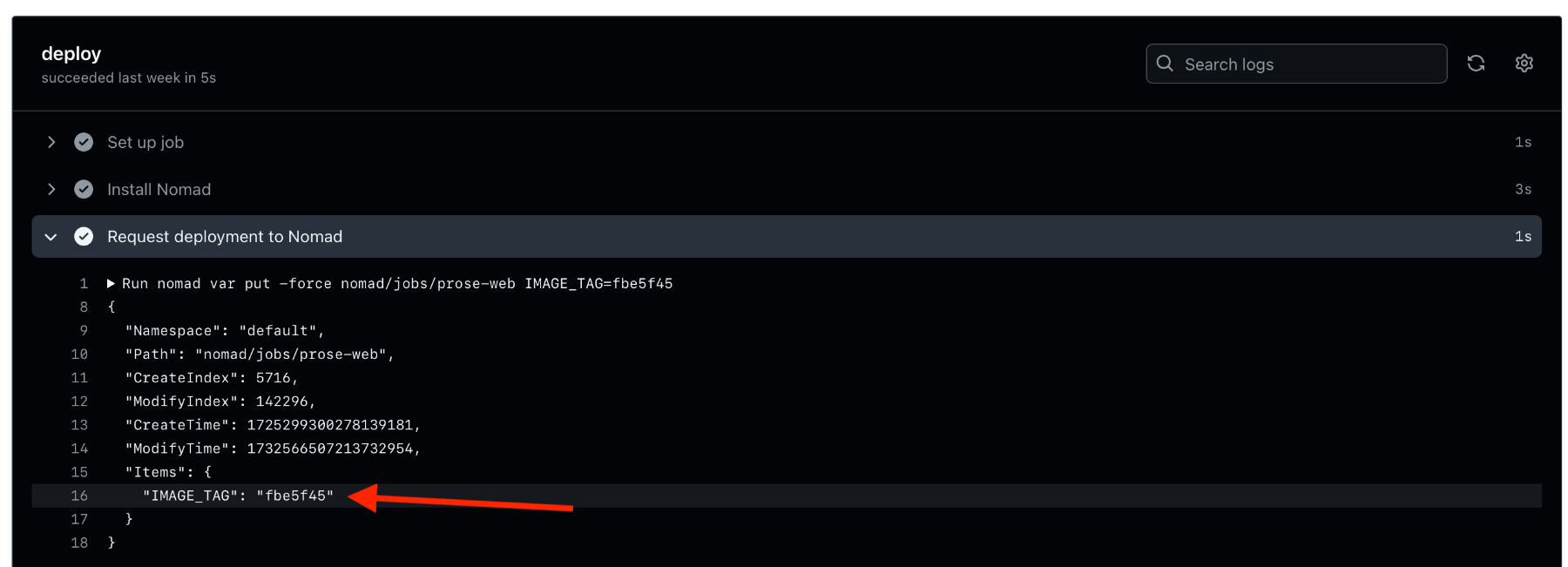

nomad var put -force nomad/jobs/prose-web IMAGE_TAG=${{ needs.build.outputs.revision }}
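The deploy job reads needs.build.outputs.revision, so the build job has to publish that output. The build job is not shown in this article, so the following is only a sketch of what one of its run steps could contain. It assumes the image is tagged with the short commit SHA, which may not match how you tag your images.

```shell
# GitHub Actions provides GITHUB_SHA and GITHUB_OUTPUT on real runners; the
# fallbacks below only exist so the sketch also runs on a workstation.
GITHUB_SHA="${GITHUB_SHA:-0123456789abcdef0123456789abcdef01234567}"
GITHUB_OUTPUT="${GITHUB_OUTPUT:-$(mktemp)}"

# Assumption: images are tagged with the short commit SHA; use a version
# number instead if that is how your build step tags them.
revision="$(printf '%s' "$GITHUB_SHA" | cut -c1-7)"

# Step outputs are declared by appending name=value lines to $GITHUB_OUTPUT
echo "revision=${revision}" >> "$GITHUB_OUTPUT"
```

For the value to reach the deploy job, the step needs an id and the build job needs an outputs entry mapping revision to that step’s output.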

The following environment variables should be sourced from your GitHub secrets and variables: NOMAD_ADDR, NOMAD_TOKEN and NOMAD_HTTP_AUTH. They will be used directly by Nomad CLI’s nomad command.

We’re using the following Nomad CLI command to force-update our IMAGE_TAG variable with our new Docker image tag coming out of our build step:

nomad var put -force nomad/jobs/prose-web IMAGE_TAG="$version_number"
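Because the update is forced, an empty or malformed tag would be written as-is and rolled out. A small guard can catch that before calling Nomad. This is a sketch of my own: the two function names are hypothetical, and the check only covers Docker’s tag syntax (letters, digits, underscores, periods and hyphens, at most 128 characters, not starting with a period or hyphen), not whether the image actually exists in the registry.

```shell
# Returns 0 when the value is a syntactically valid Docker image tag
is_valid_image_tag() {
  case "$1" in
    # empty, starts with '.' or '-', or contains a forbidden character
    '' | [.-]* | *[!A-Za-z0-9_.-]*) return 1 ;;
  esac
  [ "${#1}" -le 128 ]
}

# Hypothetical wrapper: refuses to touch the variable if the tag looks wrong
deploy_tag() {
  if ! is_valid_image_tag "$1"; then
    echo "refusing to deploy invalid image tag: '$1'" >&2
    return 1
  fi
  nomad var put -force nomad/jobs/prose-web "IMAGE_TAG=$1"
}
```

In the workflow, the run step would then call deploy_tag "$version_number" instead of invoking nomad var put directly.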

Good! Now we can commit our workflow update to our master branch.

5. Test a deployment from GitHub Actions

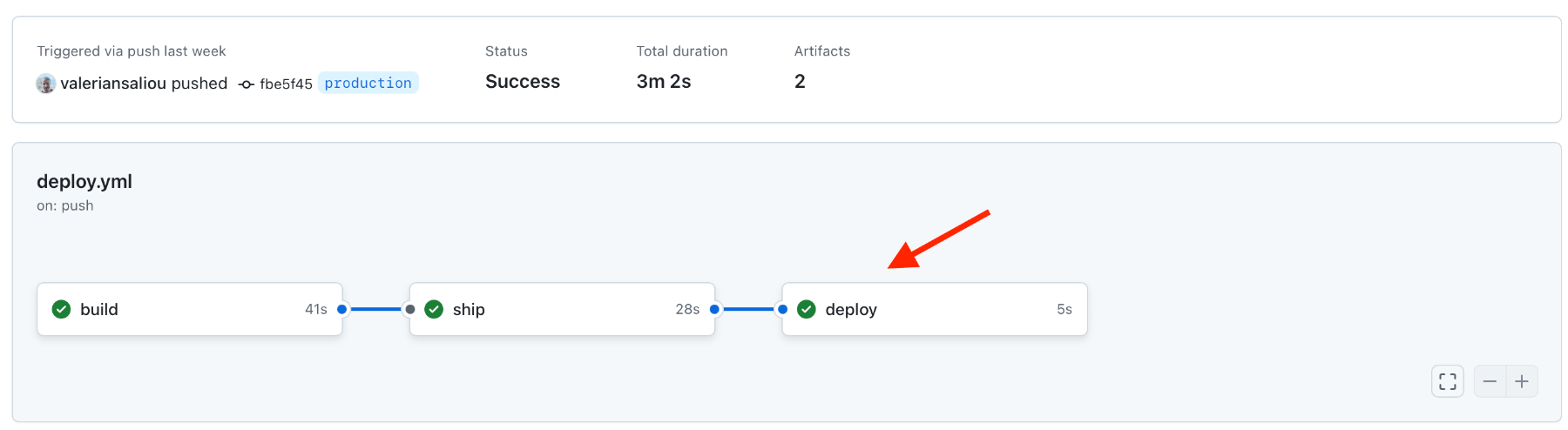

Let’s merge our work from master to production, and watch the magic happen:

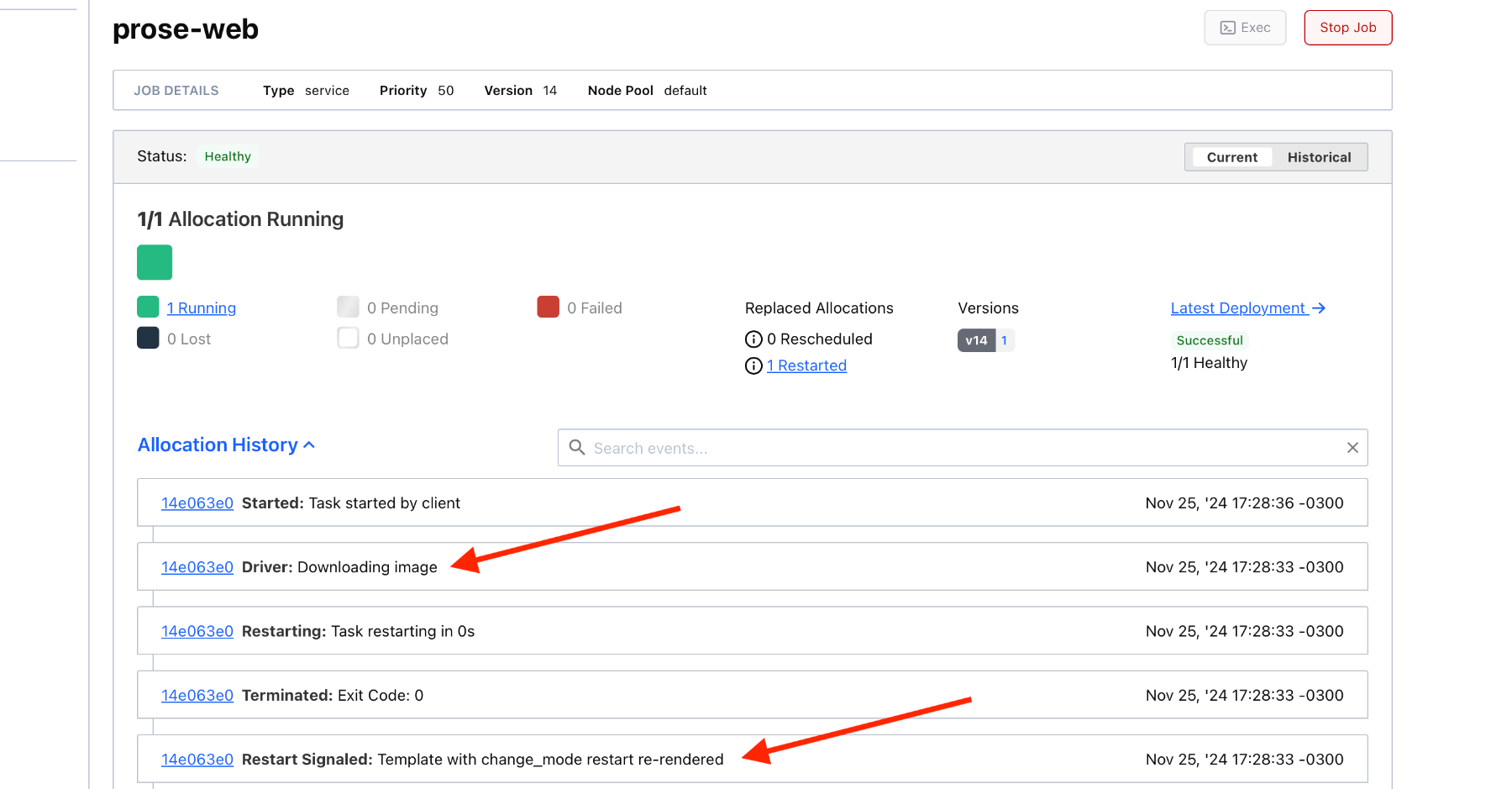

If you look at your Nomad Web UI, you should see a job deployment being triggered a few seconds after your deploy GitHub Actions job completes:

Job done! 😃

6. Summary of steps

For more clarity, I’ve summarized all the steps we’ve taken in this article:

- Update your Nomad job definition so that the Docker image tag is sourced from an environment variable, itself sourced from a Nomad variable. Also, create this variable in Nomad and initialize it to a value of latest.

- Set up an HTTPS proxy to Nomad on a public host. It is also recommended to protect it with an HTTP Basic Auth password, in addition to your Nomad token.

- Create a Nomad token solely used by GitHub Actions, with a restricted policy to give it access to certain actions only (here, write Nomad variables for specified variable paths).

- Configure the Nomad token we’ve created, as well as your HTTP Basic authentication password, in your GitHub project secrets.

- Build your Docker image from a GitHub Actions pipeline, and release it to a registry, e.g. GitHub’s container registry.

- Add a deployment step that uses Nomad’s client to hit your Nomad API and update the image tag variable. This will trigger a job re-evaluation and result in Nomad updating your running containers.

🇦🇷 Written from Buenos Aires, Argentina.